Web Scraping

Quick and secure data scraping with Octo Browser

Web scraping and multi-accounting browsers

Web scraping is an automated process of collecting large amounts of online data. In marketing and product design, it is used to analyze the market and monitor competitors’ prices.

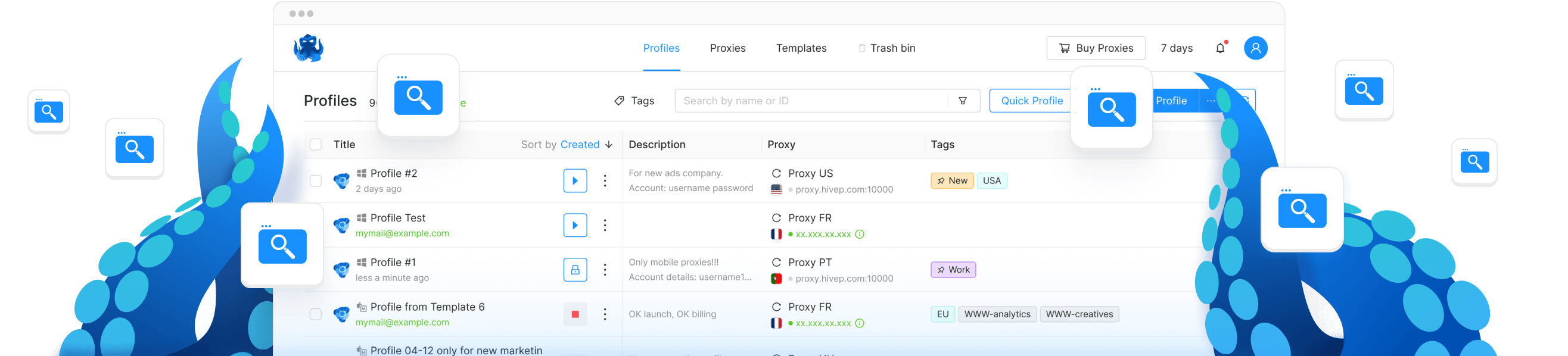

Most popular websites and platforms actively protect their data from scraping by tracking IP addresses, checking the User-Agent header and system language settings, and applying other identification methods. Octo Browser has an advantage over ordinary scripts and scrapers: online platforms treat Octo profiles as regular users visiting a website, so they serve the data without the restrictions applied to detected bots.

Valuable online data is commonly protected against scraping. The checks go beyond HTTP headers, which are easy to override, and IP addresses, which are easy to change with proxies: web fonts, extensions, cookies, and other digital fingerprint parameters are monitored as well. In such cases Octo Browser becomes essential for collecting data securely, because it uses digital fingerprints of real devices that don’t arouse the suspicion of websites’ defensive systems.

The most common reason for bans is misconfigured automation. Don’t send a large number of requests from the same IP address, because such addresses are quickly blacklisted. It is better to rotate through multiple proxies while keeping the request rate from each IP address at a safe level. Even if you run into a ban that proxy rotation doesn’t solve, Octo Browser lets you completely spoof the traceable parameters of your digital fingerprint and continue collecting data.
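The advice on rotating proxies and limiting the request rate per IP address can be illustrated with a small sketch. It is not part of Octo Browser or its API. The class name `RotatingProxyPool`, the `min_interval` parameter, and the proxy addresses in the usage note are hypothetical, and what counts as a "safe" interval depends on the target website.

```python
import itertools
import time


class RotatingProxyPool:
    """Hypothetical helper: hands out proxies round-robin and enforces
    a minimum pause between two uses of the same proxy."""

    def __init__(self, proxies, min_interval, clock=time.monotonic, sleep=time.sleep):
        self._proxies = list(proxies)
        if not self._proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(self._proxies)
        self._min_interval = min_interval  # seconds between uses of one proxy (assumed value)
        self._clock = clock                # injectable so the pool can be tested without waiting
        self._sleep = sleep
        self._last_used = {}               # proxy -> timestamp of its last use

    def acquire(self):
        """Return the next proxy, sleeping first if it was used too recently."""
        proxy = next(self._cycle)
        last = self._last_used.get(proxy)
        if last is not None:
            wait = self._min_interval - (self._clock() - last)
            if wait > 0:
                self._sleep(wait)
        self._last_used[proxy] = self._clock()
        return proxy
```

A caller would create the pool once, for example `RotatingProxyPool(["http://proxy1.example:8080", "http://proxy2.example:8080"], min_interval=5.0)`, and call `acquire()` before each request, passing the returned address to the HTTP client as its proxy. With more proxies in the pool, each individual IP address is used less often at the same overall request rate.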
